In recent years, giant steps have been made in translation software. Just think how often you use Google Translate. You can use it not just on your computer but also, thanks to its image recognition abilities, to translate menus and street signs when you’re on holiday abroad.

Yet interpretation is still left to human interpreters. With the rapid globalization of Japanese society, there is more need than ever for multilingual interpretation. In particular, people want simultaneous interpretation that will enable real-time communication in business discussions and negotiations, and at international events.

Now a consortium of some of the biggest names in Japan’s IT and telecom sectors has come together to take the next big step on from translation software such as Google Translate: simultaneous interpretation.

Among the organizations developing the necessary software are Toppan Printing, Mindward, Intergroup, Yamaha Corporation, Fairy Devices, Sourcenext, and KDDI, along with the National Institute of Information and Communications Technology, a national research institute. The consortium also includes giants like NTT East.

The Consortium for the Promotion of Advanced Multilingual Translation Technology has already developed sequential interpretation technology. This is available now, but it has its drawbacks. Sequential interpretation technology only translates what the speaker says when he or she stops speaking. This hinders the flow of a conversation and makes for a frustrating experience, as the speaker has to stop and wait for the software to translate what he or she has just said.

That’s why the consortium wants to go a step further and develop simultaneous interpretation technology. The new technology will be able to translate what the speaker says at the same speed that he or she says it, without interrupting the flow of the conversation. This will make interpretation faster and smoother.

The consortium plans to research and develop simultaneous interpretation technology by using AI to break up long sentences into shorter, more easily translatable chunks. It also hopes to make the existing interpretation software more accurate, by using various information sources and taking into account factors such as overall context and situation recognition.
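The idea of breaking long sentences into shorter chunks can be illustrated with a toy sketch. This is not the consortium's method, which is described only as using AI. The function `chunk_stream`, the `max_words` limit, and the punctuation-based boundary rule are all assumptions made up for illustration; a real system would presumably learn where to cut.

```python
from typing import Iterable, Iterator, List

# Hypothetical boundary markers (an assumption for this sketch);
# a real system would likely predict boundaries with a trained model.
BOUNDARY_PUNCT = (",", ";", ":", ".", "?", "!")


def chunk_stream(words: Iterable[str], max_words: int = 6) -> Iterator[List[str]]:
    """Yield short chunks of words as soon as a boundary is reached."""
    chunk: List[str] = []
    for word in words:
        chunk.append(word)
        # Close the chunk at punctuation, or when it grows too long.
        if word.endswith(BOUNDARY_PUNCT) or len(chunk) >= max_words:
            yield chunk
            chunk = []
    if chunk:
        # Flush whatever is left when the speaker stops.
        yield chunk


sentence = "When the meeting starts, we will review the budget, and then we will vote."
chunks = [" ".join(c) for c in chunk_stream(sentence.split())]
for piece in chunks:
    print(piece)
```

Each chunk could be handed to a translator as soon as it is complete, instead of waiting for the speaker to finish the whole sentence. That is the difference between sequential and simultaneous interpretation described above.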

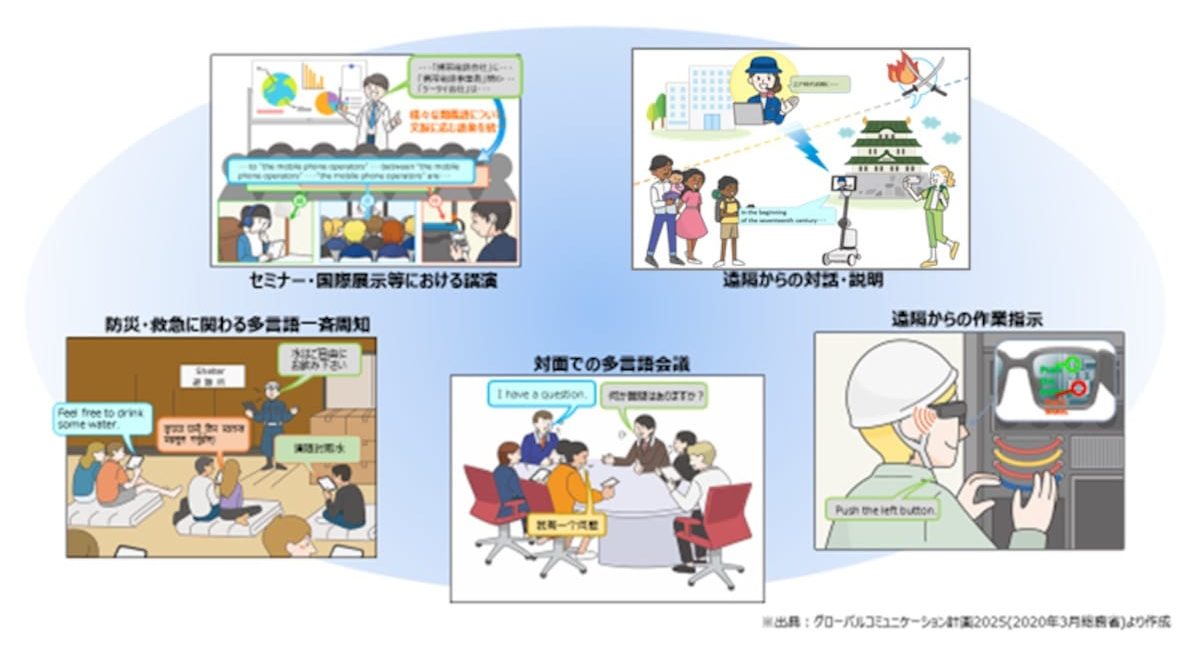

The consortium envisages that the software will mainly be used in business situations. It will make it possible to have meetings, whether in person or online, with partners and colleagues who don’t speak your language without the need for a human interpreter. Another use would be interpreting instructions for the benefit of workers at remote production sites. This could be via a wearable device, or as a smartphone app.

But there are many other places where the new technology is likely to prove popular. One is in museums, enabling visitors to automatically understand what the museum guide is saying. Another is in disaster prevention facilities, explaining disaster prevention guidelines to non-native speakers.

There are also likely to be multiple uses for the new technology in schools and universities. You’ll be able to listen to lectures and attend online conferences even if you don’t speak the language of the country they’re being held in. In situations in which teachers and parents don’t speak the same language, they will be able to communicate with each other fluently.

In addition to translating what someone says at the same speed that they say it, the consortium is hoping to use voice recognition technology to track what someone is saying, even when there is background noise. This will come in very handy on factory tours, at exhibitions and at sightseeing spots. And the new technology is also expected to be able to recognize and interpret each voice individually in situations in which people talk all at once, such as a lively panel discussion.

As well as making communication smoother and life more convenient, simultaneous interpretation is likely to have a big impact on productivity at work and to allow workers to do their jobs autonomously, without the need for human interpreters.

Read more stories from grape Japan.

- External Link

- https://grapee.jp/en/

6 Comments

divinda

Would be curious how these (apparently not polyglot) consortium developers get around the fact that some languages have verbs mid-sentence, some have them at the end, while others attach tenses to the end of verbs in order to denote an unspoken subject, and lots of languages have no verb tenses at all and instead use time words/phrases which can come at any point in the sentence.

Perhaps this is why "Sequential interpretation technology only translates what the speaker says when he or she stops speaking."

proxy

Translating Japanese to languages like English is really hard. The lack of a subject in most spoken Japanese makes it very hard to translate using AI. I have never seen any electronic translation that can translate "Hiroshima ni itta" to "My sister went to Hiroshima." The subject "my sister" is understood in Japanese but electronic translation really struggles trying to figure out who the subject is.

Ascissor

I'll only be impressed when the AI translates "something-something整備 something-something検討something-something充実something-something実施" as "I'm spurting out ambiguous claptrap in order to evade responsibility".

geronimo2006

Good luck with that. For AI to be able to achieve simultaneous interpretation accurately it would require AI to understand context (social, historical, political etc.) and be a mind reader (to be able to get intention, nuance etc.) as well as be something of a fortune teller (to know future goals etc.). That's why Google Translate is good at translating simple single sentences but becomes garbled beyond that in most complex situations. The human brain with its senses is far superior for now. When AI does get that powerful one day it probably won't be bothered translating for us because it will already be running everything.

Nihonview

Goodbye Native English teachers